Artificial intelligence (AI) agents have exploded in popularity. Even though the technology is still relatively new, it’s already stirred up a mix of curiosity and concern. You’ve probably heard or asked questions like: Is this just a passing trend? Is it taking credit for real human work? Can it actually produce anything good? Could it replace me? There’s no shortage of skepticism and, I’ll admit, I shared a lot of it.

If you’re like me and are just now considering one of these tools for coding, this blog post is for you.

My Experiment

At 219 Design, we don’t use AI for confidential client work, so I hadn’t had much hands-on experience with it. While we do use AI to help with tasks like internal Google Scripts, marketing, and spreadsheets, I wanted to push further and ask: How would an AI agent perform when integrated with a full C++ project? I made a point to stay as hands-off as possible to really see whether the agent could save me time and deliver code that holds up.

I went into this experiment with a heavy dose of doubt. My main concern was that it wouldn’t actually save time. I figured I’d end up spending more time crafting just the right prompts to get a usable response, digging through generated code to fix odd bugs, and ultimately doing the work myself anyway. The reality surprised me.

A Practical Example

After some initial experimenting to get comfortable with an AI agent, I wanted to answer two questions:

- How much could I accomplish in a day with the AI agent?

- What sort of program could it help me with?

I decided to have it write an OpenGL 3D renderer. Renderers have a whole lot of setup code just to draw a triangle in a window. The agent was able to do this with one query: “Add an OpenGL window and display a triangle.” Done!

I kept going, staying as hands-off as possible. As I added features, the agent usually produced code that compiled and worked; sometimes I had to fix a few things with follow-up queries. It was also able to point me to helpful free, open-source libraries.

The agent was great at adding boilerplate features, such as the setup code a renderer needs. Eventually I started requesting features that required more cleverness, and this is where the agent faltered more often.

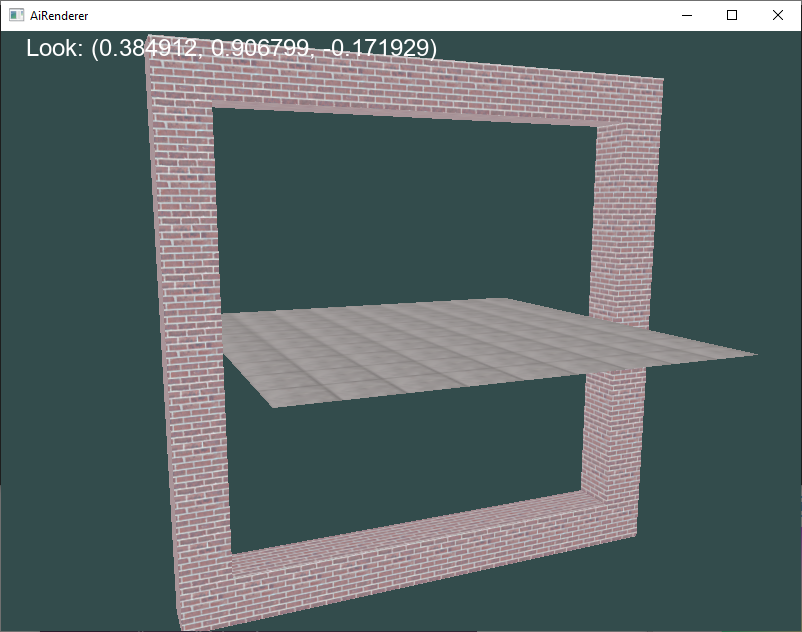

Sometimes you get what you ask for!

Prompt: Put a wall around the floor

By the end of one workday, the engine had the following:

- Rendering simple 3D shapes

- Camera control

- Text rendering

- Map loading

- Collision detection

- A partially finished HUD

Here’s a quick video of the results:

How to Talk to an Agent

Learning to Speak Prompt Language

Crafting effective prompts is very much like learning a new language. The more you work with AI agents, the better you get. Early on, some of my results were underwhelming simply because I wasn’t asking the right questions. Sometimes, I didn’t even fully understand what I wanted, which made it hard to explain clearly. Just like with real people, if you ask something vague, you’re going to get a vague answer. Specificity matters.

In fact, working with the agent reminded me a bit of mentoring a junior developer (though a junior developer is much more capable than an AI!). You can choose to give very granular instructions like “make a new class,” “add an integer here,” or “loop through this list,” but doing so isn’t productive. Whether you’re managing a junior dev or prompting an AI, spoon-feeding every step tends to waste more time than it saves.

On the flip side, being overly broad doesn’t help either. Asking the agent to “make an Android app” or “build a full real-time strategy game” is likely to get you incomplete or incoherent results. Like a junior dev, the AI will do its best, but you can’t expect miracles from an open-ended prompt.

The Sweet Spot

Over time, I found that the sweet spot was to push gently at the limits of what the AI could reasonably handle. Start small, figure out where it performs well, then gradually give it more responsibility. Sometimes the agent will surprise you: it might make smart connections or deliver code that’s 95% correct. At that point, a quick follow-up query is often enough to clean it up.

As an example, in my 3D renderer I asked the agent to parse a text file. It recognized that the file was a map describing where to place the walls, and it took extra steps I had expected to need more prompts for. Loading the map and rendering the walls from it turned out to be the fastest feature to implement.

The HUD, which I couldn’t finish before the end of my one-day challenge, is a good example of a not-so-sweet spot. Maybe I just couldn’t word my prompts well enough, but the agent struggled to lay out the HUD to my specifications.

Show & Tell

There were times when phrasing the right query felt genuinely difficult. This was especially true for visual programming tasks, like building UI or handling graphics, where it’s often easier to show than tell. This is why I chose to make a 3D renderer: that type of program forces the issue. But unlike with a human developer, with the agent I could only tell, and it’s hard to verbalize visual or spatial concepts precisely. Because I challenged myself to be as hands-off as possible, I ended up facing those tougher prompts head-on, ones I might have avoided otherwise. It made for a fascinating exercise.

How to Fix the Bugs

Of Course, There Were Bugs

That’s to be expected with any coding. What stood out when working with an AI agent, though, was that debugging felt a lot like working through someone else’s code, so figuring out what’s going on takes a bit more effort.

It’s still important to review the code the AI writes rather than blindly accepting its changes. Luckily, an agent like Cursor shows a diff before you accept. It helped that I did this in C++, a language I know very well, so I could spot code I didn’t like. AI could also help you write a program in a language you’re less familiar with, but that code would be more difficult to debug. I didn’t try it in my experiment, but I imagine AI would be great for setting up unit tests!

Mostly Good Code, But…

Going in, I expected a lot of strange, AI-specific errors: bizarre syntax mistakes, code that wouldn’t compile, or logic that no human would reasonably write. Surprisingly, that wasn’t the case. Most of the time, the agent generated clean, syntactically correct code that compiled without issues. There were only a few oddities. Once, it put a for-loop outside of any function. Another time, it named a function in PascalCase and tried calling it in camelCase. Overall, though, these errors weren’t something I had to worry about.

In many cases, I could simply point out the bug to the agent, and it would recognize the problem and fix it immediately. Being able to point to the exact failing line made a big difference. Often, the agent would get 95% of the code right, with just one or two lines needing correction. In those situations, it was usually faster to fix the issue myself than to go back and forth.

There were a few times, though, when the agent hit a wall. It would keep trying to fix a feature but never quite get it right, even after multiple iterations. When that happened, I had two options: either fix the issue myself, or roll back the changes and try a different approach, usually by breaking the request into smaller, more specific prompts. Both strategies worked; which one I chose depended on how much time I was willing to invest versus how close the AI was to getting it right.

Did the AI Agent Save Time?

In my experience, the answer is a definite yes, but with some caveats. If you’re micromanaging the agent, step by step, it may end up being faster to just write the code yourself. And, if you’re trying to implement something in a clever or unconventional way, the AI might not be able to follow your lead.

That said, AI agents really shine when it comes to writing boilerplate or tedious code – the kind of stuff that’s more about typing than thinking. We’ve all been there: you know exactly what needs to be written, and it’s just a matter of slogging through it. For those situations, the AI can be a huge time-saver. Whether it’s generating a Q_PROPERTY in a Qt widget, parsing a text file, or setting up a tree structure, the agent can handle it quickly and reliably – freeing you up to focus on the more interesting parts.

Does an AI Agent Produce Good Code?

My answer is a bit mixed. For the most part, especially when generating boilerplate or repetitive code, it does a good job. The syntax is clean, and the structure is usually sound. However, the agent tends to over-engineer solutions. It often produces more code than necessary to solve a relatively simple problem.

That said, when I pointed out these kinds of issues, the agent was quick to acknowledge them, often replying with something like “You’re right, that’s a better approach!” and then adjusting the code accordingly. So even if it didn’t get it right the first time, it could usually correct itself with a little guidance.

In one case, I asked the agent to look for bugs. It flagged something minor, not really a problem, and proposed a fix that involved creating an entirely new utility class along with several helper functions. If I had accepted the suggestion, it would have doubled the size of the codebase unnecessarily.

To be fair, that’s not all that different from how we write code ourselves: first drafts are rarely perfect, and refining the solution is part of the process. The AI agent is no exception.

My Conclusions

After spending time with the AI agent, I’m far less skeptical than I was at the start. In the right scenario, it can be a serious time-saver. The agent types faster than any human, and it’s more than capable of handling the parts of coding you’d rather not do. Where it really shines is in laying down the broad strokes of a feature: setting up the structure, scaffolding, or repetitive patterns. From there, I could jump in to refine the logic or handle the parts that are harder to articulate in a prompt.

Am I worried that AI will replace me? No. We’ve had class-adding wizards in IDEs for decades, and at this point, AI agents are essentially wizards for many more kinds of features. They still need a real programmer to guide them.

For this experiment, I challenged myself to let the AI do most of the coding. For a real-world project, I probably wouldn’t lean on it quite as heavily, but it made me wonder: is this what “programming” will come to look like? I’ll admit, using the agent became a little addictive. Skipping the boring parts and jumping straight to the interesting ones was a refreshing change. If you haven’t tried it yet, I highly recommend giving it a shot!