“I think art can be part of the solution because art can inspire people to look at an issue they might otherwise ignore or reject. Art has the power to seduce, engage, and address our universal humanity in ways that create important conversations.”

— Shepard Fairey

The Influence of Media on the Perception of A.I.

Artificial intelligence is taking over the world. The world’s most powerful companies are funneling billions of dollars into developing new applications for artificial intelligence, and the effects of these investments are already being felt in our everyday lives – in how we get to work, socialize with our friends, take care of our homes, and more. However, developments in A.I. have not all been positive. Stories about machine learning algorithms developing their own creepy language, or about A.I.s turning into superintelligent killer robots, regularly appear in our news feeds. So which is it? Is A.I. going to enrich humanity? Or is it going to end up destroying the world?

There are strong opinions on both sides of this debate, each with piles of scientific papers and mathematical calculations supporting analyses of what A.I. is and how it will affect our lives. But these numbers and figures only form a part of the picture. A.I. is a nuanced subject that warrants a deeper exploration.

Through the years, humankind has turned to art to examine complex subjects from unexpected vantage points, and artificial intelligence provides particularly rich material for artistic analysis. Art is one of our main conduits for expressing human intellect and identity – but what might art look like when filtered through an artificial mind?

Artificial Intelligence: A Group Pop-up Art Exhibit

Earlier this month, with the generous support of 219 Design, we were able to put together the Artificial Intelligence exhibit, which seeks to explore some of these heady questions. It primarily features tangible, multi-sensory explorations of A.I., created by artists and creatives working with technology. These pieces are immersive and interactive, blurring the lines between artist and audience, human and artificial intelligence.

Although interactive art pieces are only fully experienced in person, below is an attempt to distill the essence of each work:

Scanning An Artificial Brain, 2017

by Albert Lai and Purin Phanichphant

When you look at a photo of a cat, your retinas absorb light and send information through layers of neurons in your brain until you suddenly think ‘cat!’ In an artificial neural network, image data flows through layers of artificial neurons (mathematical representations instead of the messy stuff in your head) until the network finally guesses: ‘cat with 99% probability.’ Each block in this visualization represents one layer of neurons in the network. The layers are arranged sequentially, and you can see a vague outline of the original image in the first layer. This is because neurons in earlier layers tend to detect low-level details such as edges, corners, and colors (known as ‘features’ in computer vision) and activate strongly when they see them.
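To make the idea of an early-layer ‘feature’ concrete, here is a minimal sketch in plain Python. It is not the code behind the installation: the kernel below is a hand-written, Sobel-style vertical-edge detector standing in for a single learned neuron, and the tiny image and the function name `convolve2d` are assumptions made for illustration.

```python
# Hypothetical illustration: a hand-written vertical-edge kernel standing in
# for one learned early-layer neuron. Real networks learn their kernels.

def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

# Sobel-style kernel: responds where brightness increases from left to right.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# A 5x6 grayscale image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

# The "neuron" is silent on flat regions and active along the edge.
activations = convolve2d(image, VERTICAL_EDGE)
```

The activation map is zero over the flat dark and bright regions and non-zero only where the window straddles the boundary, which is why a first-layer visualization still resembles an outline of the input.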

Lost In Google Translation, 2017

by Kevin Ho and Purin Phanichphant

Neither humans nor machines are perfect. While the Google Neural Machine Translation system can translate phrases across a wide range of languages instantaneously, something that extremely few humans are capable of, its accuracy might never catch up with the subtleties of the human languages it works with. These discrepancies become evident when a phrase is first translated (in this case, into Thai) and then translated back into English. The shortcomings in both humans’ and machines’ capabilities point to a future in which the two coexist and collaborate.

Type Space, 2017

by Kevin Ho and Purin Phanichphant

Type Space is an installation that explores what is possible when machine learning and design intersect.

Font selection can be a tricky task. There are so many different fonts to choose from, each with its own nuances and characteristics. By using a neural network to look at over 200 fonts, we created a space where the visual relationships between fonts can be explored. Fonts are grouped without any human intervention, giving a bit of insight into how neural networks see.
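The idea of a ‘space’ of fonts can be sketched with a few lines of Python. This is only an illustration under stated assumptions: in the installation the coordinates come from a neural network looking at the fonts, whereas the font names and the three hand-picked features below (stroke weight, serif-ness, slant) are hypothetical, as is the helper `nearest_fonts`.

```python
import math

# Hypothetical, hand-picked coordinates: (stroke weight, serif-ness, slant).
# In the installation, a neural network produces the coordinates instead.
FONT_FEATURES = {
    "Serif A": (0.4, 0.9, 0.0),
    "Serif B": (0.5, 0.8, 0.1),
    "Sans A": (0.4, 0.1, 0.0),
    "Sans B": (0.5, 0.0, 0.1),
    "Script A": (0.3, 0.2, 0.9),
}

def nearest_fonts(name, features, k=1):
    """Return the k fonts closest to `name` by Euclidean distance."""
    target = features[name]
    distances = [
        (math.dist(target, vector), other)
        for other, vector in features.items()
        if other != name
    ]
    distances.sort()
    return [other for _, other in distances[:k]]

# Fonts that sit close together in the space look alike.
serif_neighbor = nearest_fonts("Serif A", FONT_FEATURES)
sans_neighbor = nearest_fonts("Sans B", FONT_FEATURES)
```

Once every font is a point, ‘grouping without human intervention’ reduces to asking which points are close together; nobody has to label a font as serif or sans for the two families to land in separate neighborhoods.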

A.I. Duet, 2016

by Yotam Mann

This installation uses machine learning to let the audience play a duet with the computer. Anyone can start playing a few notes on the keyboard (without having to know how to play piano), and the computer will respond to the melody.

Rather than being programmed with hand-written rules for responding to key presses, A.I. Duet was played a large number of melodies. Over time, it formed fuzzy relationships between notes and timing and built its own map from the examples it was given.

A.I. Duet was built with TensorFlow, Tone.js, and open-source tools from Google’s Magenta project.

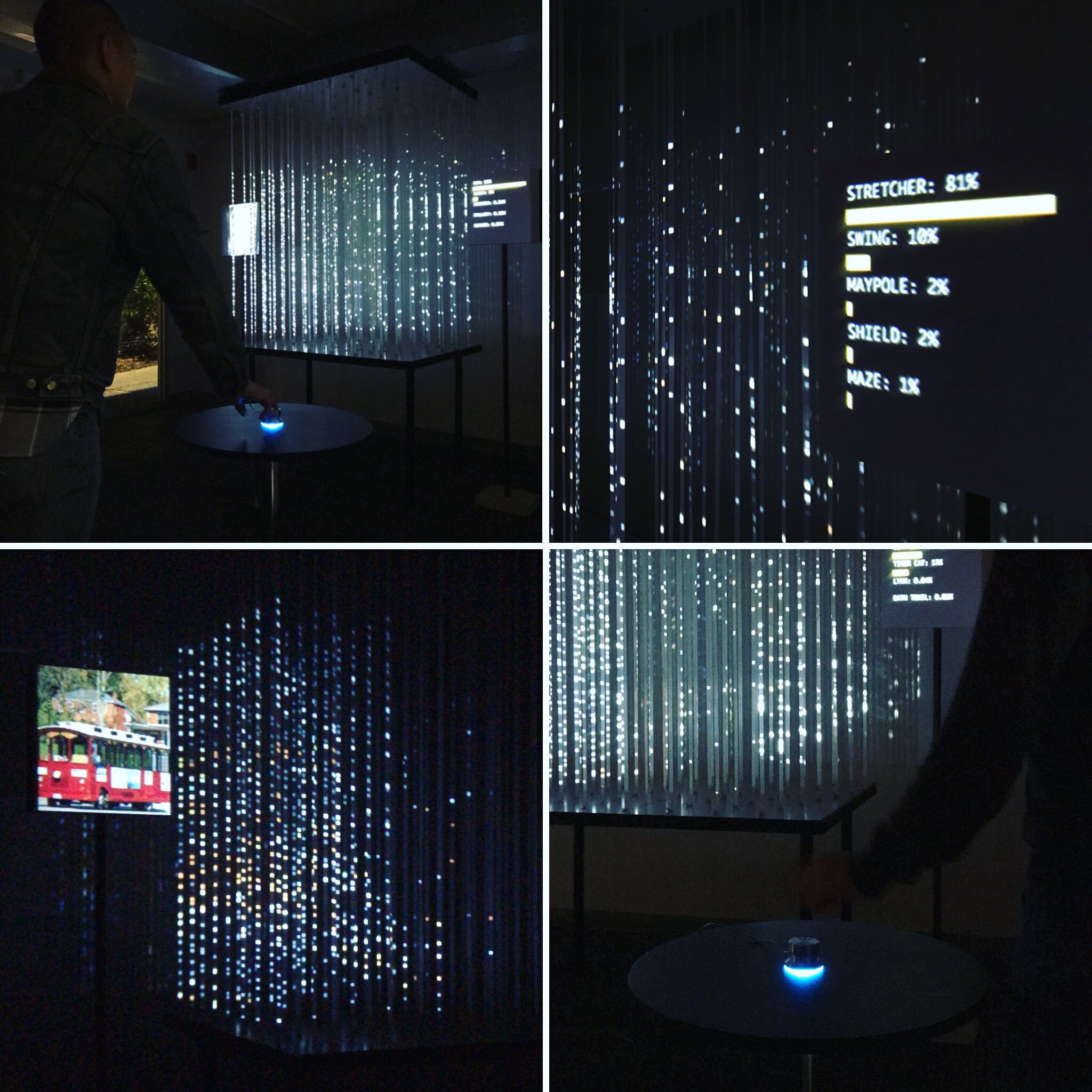

Deep Dream Vision Quest, 2016

by Gary Boodhoo

Deep Dream Vision Quest is a neural image synthesizer that creates multiplayer hallucinations and turns dreaming into a shared experience. The video installation shows the world to a neural network machine through a live camera. The machine reconstructs what it sees. We project that image back into the installation space. Until the machine detects motion, it dreams about the last thing it has seen. With each uninterrupted dream cycle the transformation of this memory becomes more extreme. Strange creatures emerge from alien landscapes. Only in stillness are they visible. They fade away when you move. This reflective “hurry up and wait” quality provides the basis for emergent gameplay.
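The stillness-versus-motion behavior described above can be sketched as a small state machine. This is not the installation’s code: the frame format (flat lists of grayscale pixel values), the frame-differencing motion test, the threshold value, and the names `mean_abs_diff` and `dream_depths` are all assumptions chosen for illustration, and the image synthesis itself is left out.

```python
def mean_abs_diff(frame_a, frame_b):
    """Average per-pixel brightness change between two equally sized frames."""
    return sum(abs(a - b) for a, b in zip(frame_a, frame_b)) / len(frame_a)

def dream_depths(frames, motion_threshold=10.0):
    """Return the dream depth after each frame.

    Depth restarts at 0 on the first frame and whenever motion is detected;
    it grows by 1 for every frame of stillness.
    """
    depths = []
    depth = 0
    previous = None
    for frame in frames:
        if previous is None or mean_abs_diff(frame, previous) > motion_threshold:
            depth = 0  # motion (or first frame): the dream resets
        else:
            depth += 1  # stillness: the dream gets one cycle deeper
        depths.append(depth)
        previous = frame
    return depths

# Hypothetical 4-pixel frames: a still scene, then someone moves, then holds.
still = [100, 100, 100, 100]
moved = [100, 180, 30, 100]
depths = dream_depths([still, still, still, moved, moved, moved])
```

In a full piece, the depth counter would decide how many times the network re-processes its last remembered image, so longer stillness yields a more heavily transformed picture and any movement snaps the projection back to the live view.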

Examining the Art in Artificial Intelligence

Over the course of the pop-up exhibit (November 10-11, 2017), approximately 150 visitors experienced our artistic examinations of artificial intelligence. The audience, made up of business leaders, creatives, and next-generation technologists, engaged in thought-provoking exchanges with various A.I.s and with one another. One visitor described his experience as “taking a peek into the A.I. black box,” while another remarked that the exhibit sparked new thoughts on how human and artificial intelligences might collaborate on creative tasks in the future. While our show may have been brief, it is clear that the impact of these explorations of the “art” in artificial intelligence will be long-lasting.