219 Design offers a 2-Day Workshop called Essential Modern Software Infrastructure for leaders looking for help setting up their software team for optimal success. Daniel Gallagher and I put together this handy 4-part guide on How to Build Your Software Infrastructure Team for people who want to do it themselves or get a sneak peek of what the workshop covers. In Part One, we shared a few core principles we live by here at 219 Design that help us deliver great code. Parts Two through Four cover practical applications of those principles across areas such as testing and processes. Throughout, we include reading recommendations for further exploration of each topic.

Part Three: Practical Applications for Automation and Containers

Previously, in Part Two of this series, we discussed source control—which keeps your entire team in agreement about the current state of your software. Here, in Part Three, we discuss Automation and Containers, which help keep your team in agreement about the proper runtime and build-time environment(s) for your software.

Automation and Containers greatly increase the team’s confidence that what works for one team member will work equally well for all. (Not to mention: the confidence that it will work for your customers.) Never again will the team become hostage to “that one mysterious PC in the corner” which produces the only shippable version of your software for reasons that no remaining team member can explain.

Automation: Documentation ↔ Automation

Goals: save time, remove human error, and create “executable documentation”

Software developers are notorious for neglecting documentation tasks. Often this stems from a very reasonable aversion to investing time in something that has little return on investment. Documentation written in human languages cannot be executed and tested the way we execute code written in formal programming languages. Like an automobile left sitting in the garage for many months, anything that isn’t tested regularly is unlikely to work when you need it.

The preceding ideas suggest that software developers would write more documentation if only that documentation were executable. Good news! For your software environments (e.g. deployment environments, testing environments, development environments), the growing trend of declarative infrastructure makes documenting your environment indistinguishable from building/executing that environment.

Any time a task can be scripted in a declarative style, we encourage you to embrace this approach, and to view such scripts as equal parts automation and documentation.
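To make the declarative idea concrete, here is a minimal Python sketch that is not tied to any real tool. The desired environment is written as plain data, which doubles as documentation. A small "plan" step then computes what must change to reach that state. The tool names, versions, and the `plan_changes` function are hypothetical illustrations. Real declarative tools (Dockerfiles, Vagrantfiles, configuration-management systems) do far more.

```python
from typing import Dict, List

# Hypothetical desired state: this dictionary is both the documentation
# and the input to automation. Names and versions are illustrative only.
DESIRED_TOOLS: Dict[str, str] = {
    "cmake": "3.28",
    "gcc": "13.2",
    "python": "3.12",
}


def plan_changes(desired: Dict[str, str], installed: Dict[str, str]) -> List[str]:
    """Return the actions needed to move `installed` to `desired`.

    Once the plan has been applied (installed == desired), this returns an
    empty list, which is what makes a declarative description safe to re-apply.
    """
    actions: List[str] = []
    for name, version in sorted(desired.items()):
        current = installed.get(name)
        if current is None:
            actions.append(f"install {name}=={version}")
        elif current != version:
            actions.append(f"change {name} {current} -> {version}")
    # Anything installed but not declared is flagged for removal.
    for name in sorted(set(installed) - set(desired)):
        actions.append(f"remove {name}")
    return actions


if __name__ == "__main__":
    machine = {"gcc": "11.4", "perl": "5.34"}
    for step in plan_changes(DESIRED_TOOLS, machine):
        print(step)
```

For the sample machine, the plan installs cmake and python, changes gcc, and removes perl. Reading `DESIRED_TOOLS` tells a newcomer exactly what the environment should contain.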

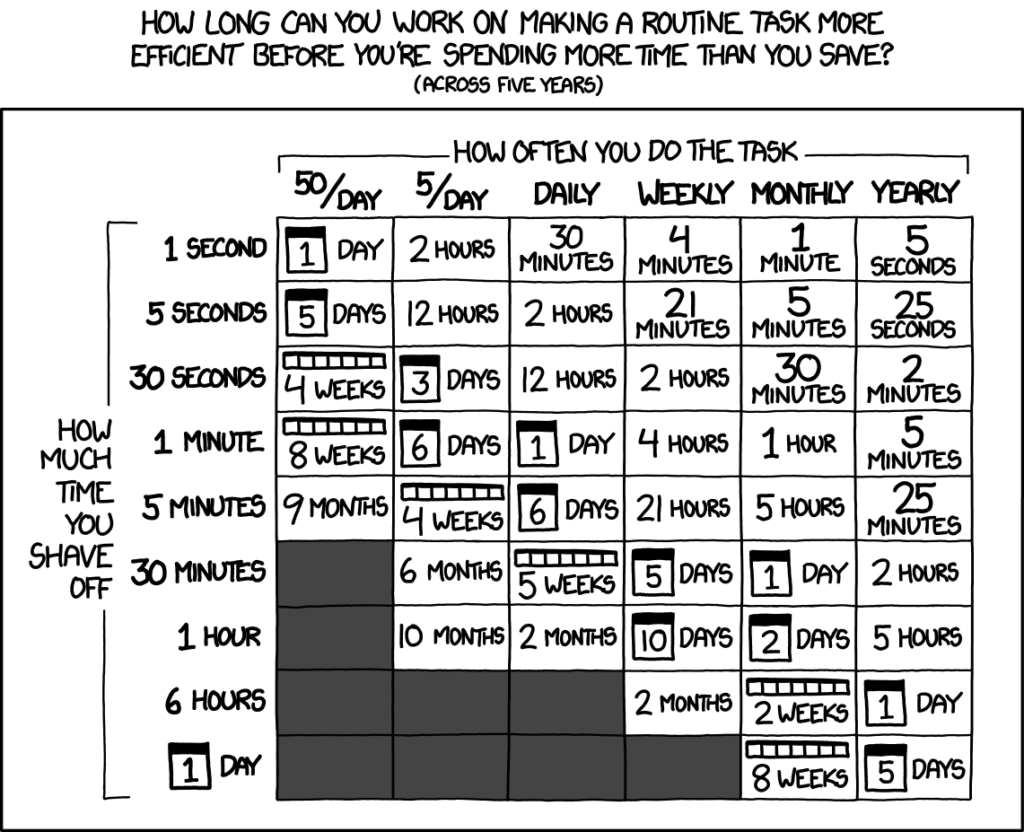

Automation is a spectrum and does not have to be done all in one go. Don’t get stuck in all-or-nothing thinking. The first time you perform a recurring task, make a list of the steps you took. The next time you repeat the task, try to devise executable commands for some of the steps and paste those commands into your original list. If, after some number of iterations, you find that all the steps now have copy-and-paste executable commands, then you can replace the initial document with a small script that will execute the series of commands all in one go.
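The incremental path above can be sketched as a Python "runbook". Each step carries a description and, once someone has worked it out, an executable command. The steps below are hypothetical placeholders, not real project steps. Manual steps are simply printed as reminders; a real runbook might pause for confirmation instead. The same list serves as documentation today and becomes a script once every step has a command.

```python
import subprocess
import sys
from typing import List, Optional, Tuple

# A step is (description, command). A command of None means the step is
# still done by hand. These example steps are hypothetical placeholders.
Step = Tuple[str, Optional[List[str]]]

STEPS: List[Step] = [
    ("Show the Python version", [sys.executable, "--version"]),
    ("Plug the test board into USB", None),  # still manual
    ("Run a placeholder command", [sys.executable, "-c", "print('step done')"]),
]


def run_runbook(steps: List[Step]) -> List[str]:
    """Run automated steps in order and return the steps still done by hand.

    subprocess.run(check=True) raises CalledProcessError on the first failing
    command, so a broken step stops the run instead of being silently skipped.
    """
    manual: List[str] = []
    for number, (description, command) in enumerate(steps, start=1):
        if command is None:
            print(f"Step {number} (MANUAL): {description}")
            manual.append(description)
        else:
            print(f"Step {number}: {description}", flush=True)
            subprocess.run(command, check=True)
    return manual


if __name__ == "__main__":
    remaining = run_runbook(STEPS)
    print(f"{len(remaining)} step(s) still manual")
```

When the returned list is empty, every step is automated. At that point the runbook has become the small script the paragraph describes.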

Don’t forget to track these incremental improvements in version control (see Part Two of this series)!

Recommended Reading:

Automating: Builds

Goals: Repeatable, idempotent, self-documenting

For compiled languages, understanding your build process goes hand-in-hand with understanding the overall software design of your project. Module dependencies at build-time stem directly from dependencies in the abstract concepts of the high-level design. A clean conceptual design can lead to an optimized and maintainable build. Likewise, pain points in the build process can help you uncover unnecessary coupling in your software design.

Most IDEs have a way to show you the command lines they run. It’s worth finding that output and putting it in a script. Then you can invoke the build any time you want, including from inside a Docker container.
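A build script of this kind can be very small. The Python sketch below assumes a CMake-based project; that is an assumption, so substitute whatever command lines your IDE reports. It keeps the goals listed above in view:

- The steps run in a fixed order.
- The build directory is created idempotently.
- The script stops at the first failure.

The function names are hypothetical.

```python
import subprocess
from pathlib import Path
from typing import List, Sequence


def default_commands(source_dir: Path, build_dir: Path) -> List[List[str]]:
    """Assumed CMake configure + build steps, as an IDE might log them."""
    return [
        ["cmake", "-S", str(source_dir), "-B", str(build_dir),
         "-DCMAKE_BUILD_TYPE=Release"],
        ["cmake", "--build", str(build_dir), "--parallel"],
    ]


def run_build(commands: Sequence[Sequence[str]], build_dir: Path) -> None:
    """Run each command in order, stopping at the first failure."""
    # exist_ok=True makes this step idempotent: re-running it is harmless.
    build_dir.mkdir(parents=True, exist_ok=True)
    for command in commands:
        # Echo each command so the build log documents exactly what ran.
        print("+", " ".join(command), flush=True)
        subprocess.run(list(command), check=True)
```

An entry point could call `run_build(default_commands(Path.cwd(), Path.cwd() / "build"), Path.cwd() / "build")`. That same call works unchanged on a developer laptop, in CI, or inside a container.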

Don’t tolerate ballooning build times. A “fresh build from nothing” can only be sped up so much, but periodic incremental rebuilds are a different story: insist that they stay fast. To monitor this, watch which files get rebuilt each time you rebuild at the command line. When you notice an object getting rebuilt that seems unrelated to your change, investigate. This is a sign that something is misconfigured or inappropriately coupled (either in the code or in the build configuration).
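The reasoning behind "watch which files get rebuilt" matches the rule Make-style tools use. A target is rebuilt when any file it depends on, directly or transitively, is newer than it. The Python sketch below is a simplified model, not how any particular build tool is implemented. It shows why one over-shared header makes seemingly unrelated objects rebuild. The file names and timestamps are hypothetical.

```python
from typing import Dict, List, Set

# Hypothetical dependency graph: each file -> files it directly depends on.
DEPS: Dict[str, List[str]] = {
    "motor.o": ["motor.c", "motor.h", "common.h"],
    "ui.o": ["ui.c", "ui.h", "common.h"],
    "motor.h": ["common.h"],
    "ui.h": [],
}


def all_inputs(target: str, deps: Dict[str, List[str]]) -> Set[str]:
    """Collect every file `target` depends on, directly or transitively."""
    seen: Set[str] = set()
    stack = list(deps.get(target, []))
    while stack:
        name = stack.pop()
        if name not in seen:
            seen.add(name)
            stack.extend(deps.get(name, []))
    return seen


def stale_targets(targets: List[str], deps: Dict[str, List[str]],
                  mtime: Dict[str, float]) -> List[str]:
    """A target is stale if it is missing or any input is newer than it.

    Simplification: inputs with no recorded time are treated as very old.
    """
    stale = []
    for target in targets:
        built = mtime.get(target)
        if built is None or any(mtime.get(f, 0.0) > built
                                for f in all_inputs(target, deps)):
            stale.append(target)
    return stale


if __name__ == "__main__":
    times = {"motor.o": 10, "ui.o": 10, "motor.c": 1, "motor.h": 1,
             "ui.c": 1, "ui.h": 1, "common.h": 20}  # common.h just edited
    print(stale_targets(["motor.o", "ui.o"], DEPS, times))
```

Editing `common.h` marks both objects stale. Editing only `motor.h` marks just `motor.o`. If `ui.o` rebuilt in that second case, that would be the kind of unexpected coupling worth investigating.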

Grow your personal software toolkit with tools for visualizing C/C++ file dependencies. Here is one we use: our wrapper of cinclude2dot.
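We won't reproduce that wrapper here, but the core idea behind tools of this kind fits in a few lines. The Python sketch below is a hypothetical, simplified stand-in, not cinclude2dot itself. It scans quoted `#include` lines and emits a Graphviz DOT graph. It ignores angle-bracket system headers, conditional compilation, and include search paths.

```python
import re
from pathlib import Path
from typing import Dict

# Matches quoted includes such as:  #include "motor.h"
# Angle-bracket includes like <stdio.h> are deliberately skipped.
INCLUDE_RE = re.compile(r'^[ \t]*#[ \t]*include[ \t]+"([^"]+)"', re.MULTILINE)
SUFFIXES = {".c", ".cc", ".cpp", ".h", ".hpp"}


def include_graph_dot(sources: Dict[str, str]) -> str:
    """Build a Graphviz DOT digraph from {file name: file contents}."""
    lines = ["digraph includes {"]
    for name in sorted(sources):
        for header in INCLUDE_RE.findall(sources[name]):
            lines.append(f'    "{name}" -> "{header}";')
    lines.append("}")
    return "\n".join(lines)


def load_sources(root: Path) -> Dict[str, str]:
    """Read C/C++ files under root, keyed by bare file name.

    Simplification: same-named files in different folders overwrite each other.
    """
    return {
        path.name: path.read_text(errors="replace")
        for path in root.rglob("*")
        if path.is_file() and path.suffix in SUFFIXES
    }
```

Calling `print(include_graph_dot(load_sources(Path("src"))))` produces DOT text. Saved to a file, it can be rendered with Graphviz, e.g. `dot -Tpng deps.dot -o deps.png`. Headers with many incoming arrows are good places to look for unnecessary coupling.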

One last note on the topic of builds: for C/C++, always maximize the warning level and treat warnings as errors!

What about compiler warnings from third-party code?

- Use the compiler flag -isystem in place of -I for third-party include directories (reference)

- Or use targeted suppression with #pragma directives
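As a sketch of both points, here is a hypothetical Python helper that assembles a GCC/Clang compile command:

- Project headers are added with `-I`.
- Third-party headers are added with `-isystem`, so most warnings from them are suppressed.
- `-Wall -Wextra -Werror` raises the warning level and turns warnings into errors.

This flag set is a common starting point rather than the true maximum; `-Wpedantic` and `-Wshadow` are further options worth considering. Directory names are placeholders.

```python
from typing import List, Sequence

# Warning flags understood by both GCC and Clang.
WARNING_FLAGS = ["-Wall", "-Wextra", "-Werror"]


def compile_command(source: str, output: str,
                    project_includes: Sequence[str],
                    third_party_includes: Sequence[str],
                    compiler: str = "g++") -> List[str]:
    """Return an argv list that compiles one file with strict warnings.

    Third-party directories use -isystem, so GCC/Clang treat their headers
    as system headers and do not report most warnings from them; our own
    headers use -I and get the full warning set.
    """
    cmd = [compiler, *WARNING_FLAGS]
    for directory in project_includes:
        cmd += ["-I", directory]
    for directory in third_party_includes:
        cmd += ["-isystem", directory]
    cmd += ["-c", source, "-o", output]
    return cmd


if __name__ == "__main__":
    print(" ".join(compile_command("src/motor.cpp", "build/motor.o",
                                   ["include"], ["third_party/somelib"])))
```

For the pragma route, GCC and Clang support `#pragma GCC diagnostic push` and `#pragma GCC diagnostic ignored "-W..."`. Put them before the offending include, then `#pragma GCC diagnostic pop` after it, so the suppression stays local.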

Containers

When you first join a software project, it can be tempting to just install all the tools and dependencies of the project right onto whichever PC you have in front of you.

Such habits were the default practice until relatively recently. However, the current best practice is to first initialize a fresh Virtual Machine (VM) or a fresh container and then install the tools and dependencies inside this fresh environment.

Repeatability: Containers and VMs

Your build script gives you a repeatable build in the sense that it will invoke the same compilation steps in the same order each time. Note, however, that the compilation steps themselves rely on various compiler binaries being present on the system.

Your container (or, alternatively, your VM) ensures that those compiler binaries are installed the same way for your whole team. This gives everyone an identical environment in which to run the repeatable build script.
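One way to see the division of labor is that the container pins the toolchain. A short preflight check can then confirm that a build is actually running inside that pinned environment before the build script starts. The Python sketch below is a hypothetical illustration. The tool names and expected version strings are placeholders; in practice you would pin the real versions in your Dockerfile or VM provisioning script.

```python
import shutil
import subprocess
from typing import Callable, Dict, List

# Placeholder pins: tool -> substring expected in its `--version` output.
EXPECTED: Dict[str, str] = {
    "gcc": "13.2",
    "cmake": "3.28",
}


def version_text(tool: str) -> str:
    """Return the first stdout line of `<tool> --version`, or '' if unavailable.

    Some tools print their version to stderr; this sketch reads stdout only.
    """
    path = shutil.which(tool)
    if path is None:
        return ""
    result = subprocess.run([path, "--version"], capture_output=True, text=True)
    lines = result.stdout.splitlines()
    return lines[0] if lines else ""


def check_toolchain(expected: Dict[str, str],
                    probe: Callable[[str], str] = version_text) -> List[str]:
    """Return a list of problems; an empty list means the environment matches.

    The substring match is deliberately crude (e.g. "3.28" also matches "13.28").
    """
    problems = []
    for tool, wanted in sorted(expected.items()):
        found = probe(tool)
        if not found:
            problems.append(f"{tool}: not found")
        elif wanted not in found:
            problems.append(f"{tool}: expected {wanted}, got '{found}'")
    return problems
```

A CI job or container entrypoint could call `check_toolchain(EXPECTED)` and exit non-zero if the list is non-empty. That fails fast before a build runs in the wrong environment.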

Added Benefits:

- Eliminates the possibility of “cross-contamination” of compiler toolchains for different target hardware or for different languages (or different language versions)

- New teammates can begin building the project almost immediately

Repeatability: Docker for Development

The most popular tool for creating containers on Linux these days is Docker.

When people first encounter Docker containers, they commonly inquire about the differences and similarities between Virtual Machines (VMs) and containers.

VMs and containers both create a so-called “guest system” nested inside your outer “host system.” In the simplest case, when only one level of nesting exists, your “host system” can also be referred to as your “bare metal” system. The host is (generally) not a virtualized system, but a system running directly on bare metal.

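In the case of a VM, the distinction between the host and the guest is much greater. The guest runs its own operating system kernel on virtualized hardware, whereas a container shares the host’s kernel.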

A container is “not really a VM”. What is it, then?

A Docker container on Linux is a clever and useful combination of kernel namespaces, control groups (cgroups), and a union filesystem.

Notably:

- a container’s lib/ and bin/ folders are assembled from stacked, “unioned” filesystem layers, and folders from your host system can be mounted into the container

- ps and top on the host show processes inside the container

- no need to reserve & assign CPU cores or RAM (unlike with a VM)

Repeatability: VMs for Development

Virtual machines also let you have a standard development environment. The following Pros/Cons can help you decide whether to reach for containers or VMs in any given situation.

VM Pros:

- Host and guest can be different OSes

- Minimal setup for a new developer (beyond downloading the VM image)

- Tool installation done once and saved to a “quickstart” image

VM Cons:

- Must pre-allocate CPU, RAM, and storage; development is also typically slower than native or Docker development

- VM images can get very large and take a long time to download

If you choose VM technology, consider using Vagrant to document and automate your VM setup.

Conclusion

If you’ve practiced our suggestions thus far, then you’ve seen that a bit of git discipline combined with a bit of infrastructure automation can pay steady dividends in team productivity.

Check out Part Four, in which we advocate for another essential practice: good testing methodology. Keep Calm and Automate On. And call us if you get flummoxed along the way!