
You’ve probably heard the buzz about Virtual Reality and Deep Neural Networks. In this post, I’ll demonstrate one way the virtual user experience can be improved by an application that recognizes the letters of the alphabet written in the air using a Deep Neural Network.

Tracking the position and orientation of the user’s head and hands enables a wide variety of user interface designs. I created this application to explore the possibilities of applying machine learning to VR interactions in my work as a software engineer at 219 Design.

If you’ve ever had the chance to try the HTC Vive, you know that the standard methods for inputting text include 1) hunt-and-peck typing by pointing the controller at a virtual keyboard or 2) typing with your thumbs on a touch-sensitive surface of the controller.

Neither of these methods takes full advantage of Virtual Reality controls. Fully-tracked VR allows User Interface designers to create new kinds of UIs that can track the position and orientation of a person’s head and hands (unlike mice, keyboards, and touchscreens). I created a demo application that uses Deep Neural Networks to leverage this tracking to create a better user experience for inputting text into a VR application.

If you don’t know how neural networks operate, I suggest you watch this 1-minute video to better understand what they do when they’ve been trained or this hour-long series of videos on how they work overall.

This demonstration uses the HTC Vive to interact with a scene created in Unity 3D. It also uses TensorFlow to create a neural network model to map user input to an inferred letter of the alphabet.

Watch this demonstration to see how well the system works under ideal conditions.

These conditions are ideal because they are similar to the training data. The training data was generated by one person writing letters in mid-air continuously for an hour (which could give you gorilla arm), in the same orientation for every character, at a consistent speed, and in relatively consistent stroke order and direction. The user in the video is also the same user who generated the training data, and the system was used in a relatively similar manner.

This is just the tip of the iceberg for what’s possible when leveraging neural networks in VR. Read more if you are interested in the technical details for this example or contact us for help with your project at getstarted@219design.com.

Next, we’ll be going over the technical details of the implementation and where it fails. Not all of the content in this section is intended to generalize to other neural network-based VR applications but rather to give you an idea of the steps involved for this type of problem.

A number of components are necessary in order to implement and perform recognition of characters while in VR:

In the diagram, there are two boxes for the HTC Vive and Unity.

The box on the left is a scene that reads a task dictionary and chooses one task randomly for the user to perform. This use case is just to write a letter of the alphabet, but other use cases could include drawing a set of symbols or carrying out a set of gestures.

The box on the right is a demonstration scene that sends data in the same form as the raw training data to the inference library. The library returns a ranking of the items in the task dictionary that are most likely to be correct, along with a score for each that approaches 1 as that letter becomes more likely. Note that this model accepts upper and lowercase letters as input from the user but always reports lowercase letters. Letters written in isolation that vary only in size, like “C” and “c” or “O” and “o”, cannot be told apart even by a human.
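As a rough illustration of the ranking described above, here is a minimal Python sketch. It assumes the task dictionary is simply the 26 lowercase letters and that the library hands back one score per letter. The function names `rank_letters` and `training_label` are hypothetical and are not part of the actual inference library.

```python
import string

# Assumption: the task dictionary is the 26 lowercase letters, in order.
TASK_DICTIONARY = list(string.ascii_lowercase)


def training_label(written_char):
    """Fold upper and lowercase input into one lowercase class label."""
    return written_char.lower()


def rank_letters(scores, top_k=3):
    """Return the top_k (letter, score) pairs, highest score first."""
    if len(scores) != len(TASK_DICTIONARY):
        raise ValueError("expected one score per item in the task dictionary")
    ranked = sorted(zip(TASK_DICTIONARY, scores),
                    key=lambda pair: pair[1], reverse=True)
    return ranked[:top_k]


# Made-up scores in which "c" is the most likely letter and "o" the runner-up.
example_scores = [0.01] * 26
example_scores[TASK_DICTIONARY.index("c")] = 0.60
example_scores[TASK_DICTIONARY.index("o")] = 0.16
top_three = rank_letters(example_scores)
```

Because “C” and “c” map to the same label, the recognizer never has to guess the case from the size of an isolated letter.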

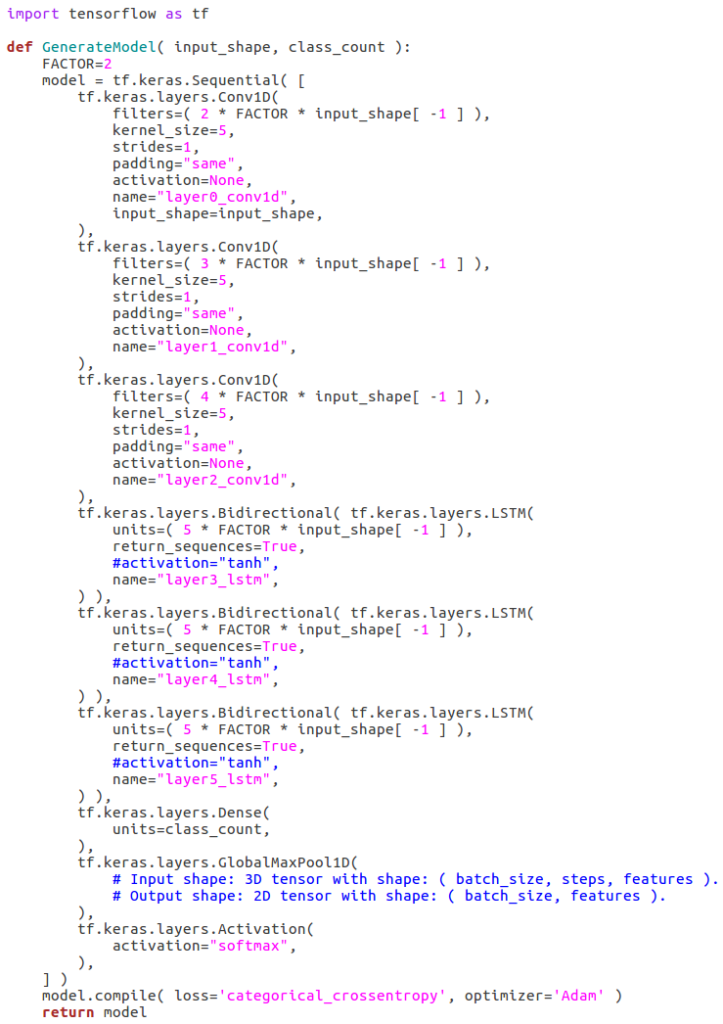

The neural network used for classification is based on the neural network used on Google’s “Quick, Draw!” website, which is described on the TensorFlow website. Below is the entire model. It includes 1-dimensional convolutions, which let it operate on small windows of sequences that can vary in length. The outputs of the convolutions are sent to Long Short-Term Memory (LSTM) cells, a unit used in Recurrent Neural Networks, which allow information in the input sequence to travel in the causal direction (i.e. from earlier in time to later in time) with relatively high fidelity. Putting the LSTM in a bidirectional wrapper also allows information at its inputs to travel in the anti-causal direction. At each step, the output of the chain of LSTM operations is passed to a regular dense neural network layer that should return a higher value on the output associated with the letter the user has written. The outputs of the dense layer are pooled by taking the maximum value of each output over every timestep. Finally, the pooled outputs are normalized via softmax so that they sum to one and look like an approximate probability distribution.
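To make the last two steps concrete, here is a small pure-Python sketch of max-pooling over time followed by softmax. It is not the model itself: the numbers are made up, there are only four hypothetical classes instead of 26, and the helper names are my own.

```python
import math


def max_over_time(per_step_outputs):
    """Pool a (timesteps x classes) table by taking each class's maximum
    over all timesteps, so sequences of any length give one value per class."""
    if not per_step_outputs:
        raise ValueError("need at least one timestep")
    return [max(column) for column in zip(*per_step_outputs)]


def softmax(values):
    """Normalize values into positive numbers that sum to one."""
    peak = max(values)  # subtract the peak for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical dense-layer outputs: 3 timesteps, 4 classes.
dense_outputs = [
    [0.1, 2.0, -1.0, 0.0],
    [0.3, 0.5, -0.5, 0.2],
    [1.5, 0.4, -2.0, 0.1],
]
pooled = max_over_time(dense_outputs)  # [1.5, 2.0, -0.5, 0.2]
probabilities = softmax(pooled)
```

Taking the maximum means a class only needs to score highly at one timestep to win, which suits strokes where the distinguishing part of a letter appears briefly.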

Even though the neural network is critical for this application, it needs quite a bit of supporting software and work in order to be of use.

In the workflow diagram, the first step was to gather raw data from a scene in Unity and a user drawing characters in VR. However, this raw data is just a log of a continuous stream of data over time since the start of the VR application and is not directly appropriate for neural network training. It contains the following pieces of data.

Initially, the log is 79.1 MiB per hour of data but contains useless data points. Those useless data points describe the ink that the user indicated and time points at which the ink isn’t being placed. When the log is split up via the Dicer and these useless data points are dropped, the result is 2175 logs totaling 23.95 MiB per hour. The Dicer also performs other important tasks: resetting the recorded time for every diced log, translating the controller and headset positions so that they are relative to the headset’s average position, and rotating the log to a representative orientation of the HMD. All of these steps normalize the input so the network has less irrelevant information to deal with.
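The following Python sketch shows one way the dicing and normalization steps described above could look. It is not the actual Dicer. The record layout (`t`, `ink`, `head`, `hand`, `head_yaw`) is hypothetical, splitting wherever the ink turns off is an assumption (the real tool may split per prompted letter), and the "representative orientation" is assumed to be the average yaw of the headset about the vertical axis.

```python
import math


def dice(log):
    """Split a continuous log into separate logs, one per run of ink-on
    samples, dropping every sample where no ink is being placed."""
    pieces, current = [], []
    for sample in log:
        if sample["ink"]:
            current.append(sample)
        elif current:
            pieces.append(current)
            current = []
    if current:
        pieces.append(current)
    return [normalize(piece) for piece in pieces]


def normalize(piece):
    """Reset time to zero, re-center on the average headset position, and
    rotate about the vertical (y) axis so the average facing direction is +z."""
    n = len(piece)
    t0 = piece[0]["t"]
    center = [sum(s["head"][i] for s in piece) / n for i in range(3)]
    # Circular mean of the yaw; a yaw of 0 is assumed to mean "facing +z".
    yaw = math.atan2(sum(math.sin(s["head_yaw"]) for s in piece),
                     sum(math.cos(s["head_yaw"]) for s in piece))
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)

    def to_local(point):
        x, y, z = (point[i] - center[i] for i in range(3))
        return (x * cos_y - z * sin_y, y, x * sin_y + z * cos_y)

    return [{"t": s["t"] - t0,
             "head": to_local(s["head"]),
             "hand": to_local(s["hand"])} for s in piece]


def make_sample(t, ink):
    """Build a made-up sample: head fixed, facing +x, hand 0.5 m in front."""
    return {"t": t, "ink": ink, "head": (1.0, 1.6, 2.0),
            "hand": (1.5, 1.6, 2.0), "head_yaw": math.pi / 2}


raw_log = [make_sample(10.0, True), make_sample(10.1, True),
           make_sample(10.2, False),
           make_sample(10.3, True), make_sample(10.4, True),
           make_sample(10.5, True)]
diced = dice(raw_log)
```

In this made-up log the hand always ends up about 0.5 m straight ahead of the origin along +z, regardless of where the user stood or which way they faced, which is the kind of irrelevant variation the normalization is meant to remove.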

After 100 training iterations, which takes an hour when running on a GPU, the accuracy reaches 100% for the training set and 99.77% (or 1 error in 435 items) for the test set. At the rate at which the test set was written, this works out to roughly 12 minutes, on average, between errors. Now that we’ve gotten to the core of the character recognition scheme, we have to put layers around it in order to interact with the user. This is done by creating a new scene in Unity using a C++ library which links against a build of the TensorFlow C++ library and uses a TensorFlow protobuf representation of the neural network.
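The accuracy and time-between-errors figures quoted above can be reproduced with a few lines of arithmetic. This sketch assumes that each diced log corresponds to one written letter and that the user keeps writing at the recorded rate of 2175 letters per hour. Both are my reading of the numbers rather than something stated outright.

```python
test_items = 435        # items in the test set
test_errors = 1         # misclassified items in the test set
letters_per_hour = 2175  # assumption: one diced log per written letter

accuracy = 1 - test_errors / test_items
minutes_per_letter = 60 / letters_per_hour
minutes_between_errors = minutes_per_letter * test_items / test_errors

print(f"test accuracy: {accuracy:.2%}")                        # 99.77%
print(f"minutes between errors: {minutes_between_errors:.1f}")  # 12.0
```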

Because the model was created in Keras on TensorFlow, it gets saved in an HDF5-formatted file. To be useful here, the Keras HDF5 version of the model needs to be loaded into Keras and then converted to a TensorFlow binary protobuf-formatted file. To use that file, we create a 64-bit Visual Studio 2017 build of the TensorFlow library from $TENSORFLOW/tensorflow/contrib/cmake, which generates 260 libraries, 185 of which we must link against in addition to _pywrap_tensorflow_internal.pyd. We can then make use of the neural network in C++ by loading the model through the TensorFlow library. When passing data to the neural network, we have to replicate the behavior of the Dicer in real time. We must also expose the library through a C-like interface in order to stream in the raw data and read out the top scores and characters. A useful note for Windows DLLs is that Unity does not unload them while the application is open, even if the scene in Unity is stopped.
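To give a feel for what "replicating the Dicer in real time" behind a streaming interface involves, here is a Python sketch of the idea. The real library is C++ with a C-like interface; everything here, including the class name `StreamingRecognizer`, its methods, and the stand-in classifier, is hypothetical. The sketch only handles buffering and time resetting, not the position and orientation normalization.

```python
class StreamingRecognizer:
    """Buffers samples while ink is on and classifies when it is released."""

    def __init__(self, classify, top_k=3):
        # classify is a hypothetical callable: stroke -> [(char, score), ...]
        self._classify = classify
        self._top_k = top_k
        self._buffer = []
        self._latest = []

    def push_sample(self, t, ink, hand):
        """Stream in one raw sample. Returns True when a new result is ready."""
        if ink:
            self._buffer.append((t, hand))
            return False
        if not self._buffer:
            return False  # ink is off and nothing is pending
        t0 = self._buffer[0][0]
        stroke = [(ts - t0, pos) for ts, pos in self._buffer]  # reset time
        self._buffer = []
        ranked = sorted(self._classify(stroke),
                        key=lambda pair: pair[1], reverse=True)
        self._latest = ranked[:self._top_k]
        return True

    def top_scores(self):
        """Return the most recent (char, score) ranking, best first."""
        return list(self._latest)


def fake_classify(stroke):
    """Stand-in for the neural network; always returns the same scores."""
    return [("a", 0.1), ("b", 0.7), ("c", 0.2)]


recognizer = StreamingRecognizer(fake_classify, top_k=2)
results = [recognizer.push_sample(0.0, True, (0.0, 1.2, 0.5)),
           recognizer.push_sample(0.1, True, (0.1, 1.3, 0.5)),
           recognizer.push_sample(0.2, False, (0.1, 1.3, 0.5))]
best = recognizer.top_scores()
```

Keeping the surface to "push one sample" and "read the latest ranking", with only plain numbers and tuples crossing the boundary, mirrors the kind of flat interface that is easy to call from Unity.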

In the current state of the implementation, this system works quite well for whoever generated the data used for training because the training data will look quite a lot like the live application data. However, there are a number of weak points in this implementation.

While TensorFlow is well-known for being user-friendly, there are a number of other frameworks for machine learning. An interesting note is that both Unity and TensorFlow (the latter via TensorFlow.js), together with WebVR-enabled browsers, should make it possible to reimplement this for the web. Given that the first section described the shortcomings of the current standard text input methods, a good next step would be to extend the model to handle general text input, including languages other than English.

We are excited by the results we were able to achieve with the limited data set we collected in this demonstration and look forward to exploring ways to collect larger amounts of data from multiple users to better train our algorithm. If we are able to achieve good results for a large number of users, we see potential to incorporate our character recognition algorithm into an open-source plugin that could allow gesture-based character entry in a variety of VR applications.

If this type of VR character entry would be valuable to you, please reach out to us. We would like to hear more about your application. 219 Design is a product development consulting company. We accelerate your product development by extending your team, offloading development, or a mixture of both. We are here to help you succeed.

You can reach us at getstarted@219design.com.